Indiscriminate data poisoning against supervised learning: general attack formulations, robust defences, and poisonability

Javier Carnerero Cano

Abstract

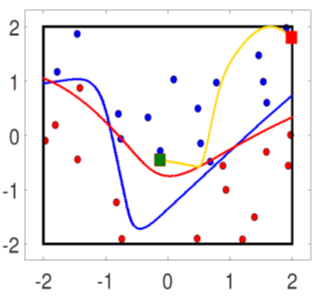

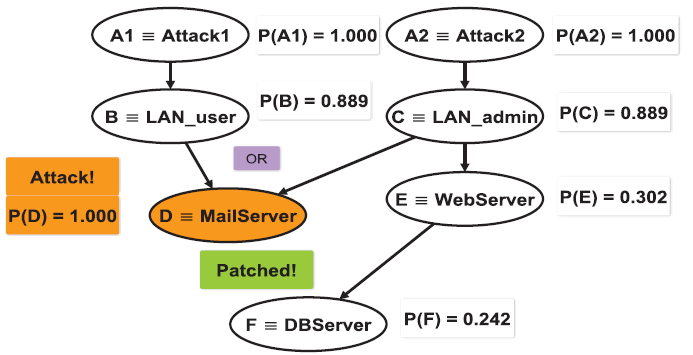

Machine learning (ML) systems often rely on data collected from untrusted sources, such as humans or sensors, that can be compromised. These scenarios expose ML algorithms to data poisoning attacks, where adversaries manipulate a fraction of the training data to degrade the ML system's performance. However, previous works lack a systematic evaluation of attacks that considers the ML pipeline, and they focus on classification settings. This is concerning since regression models are also applied in safety-critical systems. We characterise indiscriminate data poisoning attacks and defences in worst-case scenarios against supervised learning algorithms, considering the ML pipeline: data sanitisation, hyperparameter learning, and training. We propose a novel attack formulation that considers the effect of the attack on the model’s hyperparameters. We apply this attack formulation to several ML classifiers using L2 and L1 regularisation. Our evaluation shows the benefits of using regularisation to help mitigate poisoning attacks when hyperparameters are learnt using a trusted dataset. We then introduce a threat model for poisoning attacks against regression models, and propose a novel stealthy attack formulation via multiobjective bilevel optimisation, where the two objectives are attack effectiveness and detectability. We experimentally show that state-of-the-art defences do not mitigate these stealthy attacks. Furthermore, we theoretically justify the detectability objective and the methodology designed. We also propose a novel defence, built upon Bayesian linear regression, that rejects points based on the model’s predictive variance. We empirically show its effectiveness in mitigating stealthy attacks and attacks with a large fraction of poisoning points. Finally, we introduce the concept of “poisonability”, which allows us to find the number of poisoning points required so that the mean error of the clean points matches the mean error of the poisoning points on the poisoned model. This challenges the underlying assumption of most defences. Specifically, we determine the poisonability of linear regression.