RESICS: Resilience and Safety to attacks in Industrial Control and Cyber-Physical Systems

We all critically depend on digital systems that sense and control physical processes and environments. Electricity, gas, water, and other utilities require the continuous operation of both national and local infrastructures. Industrial processes, such as chemical manufacturing, materials production, and manufacturing chains, similarly lie at this intersection of the digital and the physical, as do other cyber-physical systems (CPS) such as robots, autonomous cars, and drones. Ensuring the resilience of such systems, that is, their survivability and continued operation when exposed to malicious threats, requires integrating methods and processes from security analysis, safety analysis, system design, and operation. These have traditionally been carried out separately, and each involves specialist skills and a significant amount of human effort. This separation is not only costly but also error-prone, and it delays the response to security events.

RESICS aims to significantly advance the state of the art and deliver novel contributions that facilitate:

- Risk analysis in the face of adversarial threats, taking into account the impact of security events across cascading inter-dependencies

- Characterising attacks that can have an impact on system safety and identifying the paths that make such attacks possible

- Identifying countermeasures that can be applied to mitigate threats and contain the impact of attacks

- Ensuring that such countermeasures can be applied whilst preserving the system’s safety and operational constraints and maximising its availability.

These contributions will be evaluated across several testbeds, digital twins, a cyber range, and a number of use cases from different industry sectors.

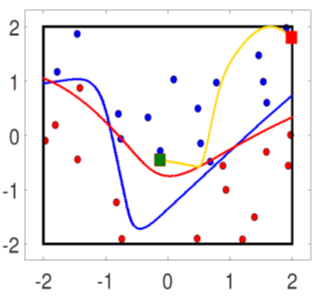

To achieve these goals, RESICS will combine model-driven and empirical approaches across both security and safety analysis, adopting a systems-thinking approach that emphasises Security, Safety and Resilience as emergent properties of the system. RESICS leverages preliminary results in the integration of safety and security methodologies with the application of formal methods, and in the combination of model-based and empirical approaches to the analysis of inter-dependencies in ICSs and CPSs.

Funded by DSTL, this is a joint project between the Resilient Information Systems Security (RISS) Group at Imperial College and the Bristol Cyber Security Group. The work will be conducted in collaboration with Adelard (part of NCC Group), Airbus, Qinetiq, Reperion, Siemens, Thales and Synoptix as industry partners, and with CMU, University of Naples and SUTD as academic partners. The project is affiliated with the Research Institute in Trustworthy Inter-Connected Cyber-Physical Systems (RITICS).

Project Publications

- E. Lupu and L.M. Castiglione. Consilience of Safety, Security and Resilience. SafeComp 2025. Position Paper.

- L. M. Castiglione, S. Guerra and E. C. Lupu. Automated Identification of Safety-Critical Attacks against CPS and Generation of Assurance Case Fragments. Safety Critical Systems Symposium SSS’25, York, 2025.

- Mathuros, Kornkamon, Sarad Venugopalan, and Sridhar Adepu. “WaXAI: Explainable Anomaly Detection in Industrial Control Systems and Water Systems.” Proceedings of the 10th ACM Cyber-Physical System Security Workshop. 2024. Best Paper Award.

- Ruizhe Wang, Sarad Venugopalan, and Sridhar Adepu. “Safety Analysis for Cyber-Physical Systems under Cyber Attacks Using Digital Twin.” IEEE Cyber Security and Resilience 2024.

Other relevant publications

- Maiti, Rajib Ranjan, Sridhar Adepu, and Emil Lupu. “ICCPS: Impact discovery using causal inference for cyber attacks in CPSs.” arXiv preprint arXiv:2307.14161 (2023). Under submission.

- L. M. Castiglione and E. C. Lupu, “Which Attacks Lead to Hazards? Combining Safety and Security Analysis for Cyber-Physical Systems,” in IEEE Transactions on Dependable and Secure Computing, doi: 10.1109/TDSC.2023.3309778.

- L. M. Castiglione et al., “HA-Grid: Security Aware Hazard Analysis for Smart Grids,” 2022 IEEE Int. Conf. on Communications, Control, and Computing Technologies for Smart Grids (SmartGridComm), Singapore, 2022, doi: 10.1109/SmartGridComm52983.2022.9961003.

- L. M. Castiglione, P. Stassen, C. Perner, D. P. Pereira, G. de Carvalho Bertoli and E. C. Lupu, “Don’t Panic! Analysing the Impact of Attacks on the Safety of Flight Management Systems,” 2023 IEEE/AIAA 42nd Digital Avionics Systems Conference (DASC), Barcelona, 2023, doi: 10.1109/DASC58513.2023.10311328.