Extracting Randomness from the Trend of IPI for Cryptographic Operations in Implantable Medical Devices

H. Chizari and E. Lupu, “Extracting Randomness from the Trend of IPI for Cryptographic Operations in Implantable Medical Devices,” in IEEE Transactions on Dependable and Secure Computing, vol. 18, no. 2, pp. 875-888, 1 March-April 2021, doi: 10.1109/TDSC.2019.2921773.

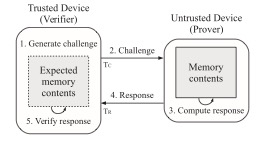

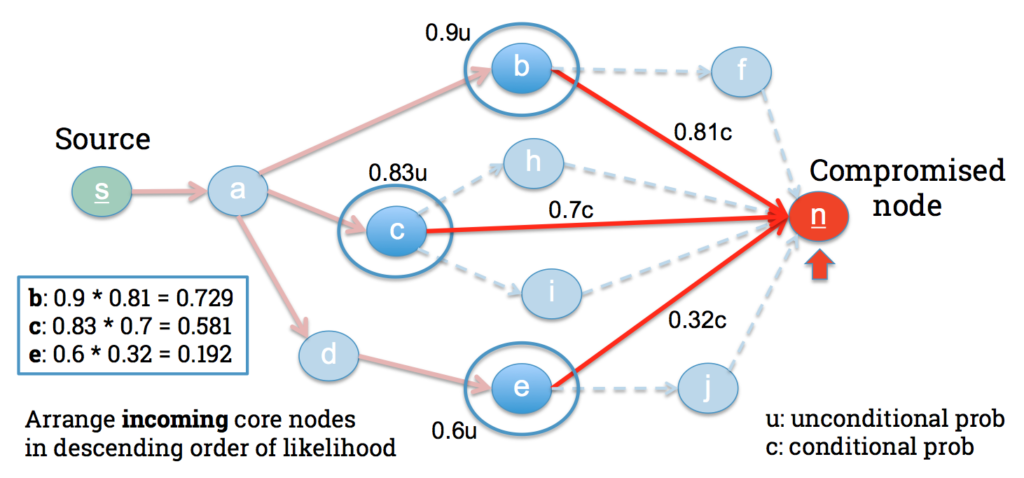

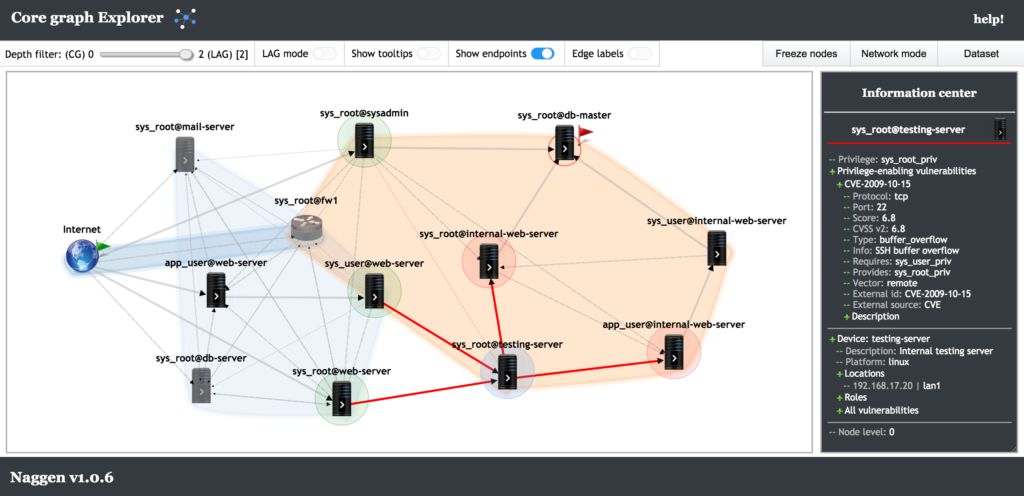

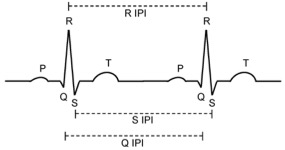

Secure communication between an Implantable Medical Device (IMD) and a gateway or programming device outside the body has proven critical, as shown by recent reports of vulnerabilities in cardiac devices, insulin pumps and neural implants, amongst others. Asymmetric cryptography is typically not practical for IMDs because of their scarce computational and power resources. Symmetric key cryptography is preferred, but its security relies on agreeing on and using strong keys, which are difficult to generate. One way to generate strong shared keys without extensive resources is to extract them from physiological signals already present inside the body, such as the Inter-Pulse Interval (IPI). The physiological signals must therefore be strong sources of randomness that meet five conditions: Universality (available in all people), Liveness (available at any time), Robustness (strong random number), Permanence (independent of its history) and Uniqueness (independent of other sources). However, these conditions (mainly the last three) have not been systematically examined in current methods for randomness extraction from IPI. In this study, we first propose a methodology to measure the last three conditions: information secrecy measures for Robustness, the Santha-Vazirani source delta value for Permanence and random source dependency analysis for Uniqueness. Second, using a large dataset of IPI values (almost 900,000,000 IPIs), we show that IPI does not have Robustness and Permanence as a randomness source. Thus, extraction of a strong uniform random number from IPI values is impossible. Third, we propose to use the trend of IPI, instead of its value, as the source for a new randomness extraction method named Martingale Randomness Extraction from IPI (MRE-IPI). We evaluate MRE-IPI and show that it satisfies the Robustness condition completely and the Permanence condition to some level.
Finally, we use the NIST STS and Dieharder test suites to show that MRE-IPI outperforms all recent methods for randomness extraction from IPIs and achieves a quality roughly half that of the AES random number generator. MRE-IPI still does not produce a strong random number, and its output cannot be used as a key to secure communications in general. However, it can be used as a one-time pad to securely exchange keys between the communicating parties. Usage of MRE-IPI is thus kept to a minimum, which reduces the probability of breaking it. To the best of our knowledge, this is the first work in this area which uses such a comprehensive method and large dataset to examine the randomness of physiological signals.
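
To make the distinction between the value and the trend of IPI concrete, the following is a minimal sketch and not the MRE-IPI algorithm itself, whose details the abstract does not give. It assumes IPIs are given as a list of numbers (milliseconds in the example), derives one bit from whether each interval is longer or shorter than the previous one, and then estimates a Santha-Vazirani style delta under one common formulation, in which every conditional probability of the next bit being 1 lies in [delta, 1 - delta]. The function names, the tie-skipping rule and the fixed history length are all illustrative assumptions.

```python
from collections import Counter


def trend_bits(ipis):
    """Hypothetical trend encoding: 1 if an interval is longer than the
    previous one, 0 if shorter. Equal neighbours are skipped (assumption)."""
    bits = []
    for prev, cur in zip(ipis, ipis[1:]):
        if cur != prev:
            bits.append(1 if cur > prev else 0)
    return bits


def empirical_sv_delta(bits, history=2):
    """Empirical estimate of an SV-source delta for a fixed history length.

    For each observed context of `history` previous bits, compute
    p = P(next bit = 1 | context) and return the smallest min(p, 1 - p).
    Values near 0.5 suggest little dependence on history; values near 0
    mean some context almost determines the next bit.
    """
    ones = Counter()
    total = Counter()
    for i in range(history, len(bits)):
        ctx = tuple(bits[i - history:i])
        total[ctx] += 1
        ones[ctx] += bits[i]
    if not total:
        raise ValueError("sequence is shorter than the history length")
    return min(
        min(ones[ctx] / total[ctx], 1 - ones[ctx] / total[ctx])
        for ctx in total
    )


if __name__ == "__main__":
    sample_ipis = [800, 810, 805, 805, 820, 790, 795]  # made-up values
    bits = trend_bits(sample_ipis)
    print(bits)
    print(empirical_sv_delta(bits, history=1))
```

A true Santha-Vazirani bound conditions on the entire prefix, so a fixed-length history only gives an optimistic estimate; it is used here because it can be computed from a finite sample.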